About Me

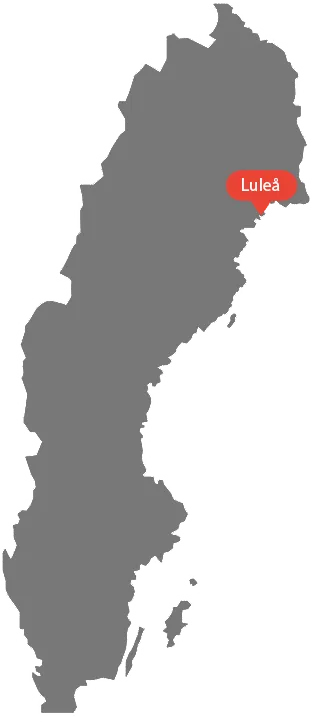

I’m a Software Developer and Consultant based in Luleå, Sweden, with a background in Software Engineering from Luleå University of Technology. Over the past several years, I’ve worked with clients ranging from startups to government agencies, building scalable systems, leading teams, and delivering clean, reliable software that solves real problems.

As a developer, I specialize in Java, Python, and modern web technologies, with hands-on experience across backend services, cloud infrastructure, and front-end development. I’ve led projects through their full lifecycles, from concept and architecture to deployment and long-term maintenance, always with an eye toward clarity, testing, and future-proofing.

I’m passionate about elegant, maintainable solutions and love finding ways to automate the boring parts of life. When I’m not coding, you’ll probably find me reading, gaming, or out on the tennis court.

Projects