Web Scrapers

Over the years I’ve built an enormous collection of scrapers to automate collecting the information I care about most. They range from simple RSS feed aggregators to targeted utilities that watch for ISP outages or local store promotions. Most jobs run as cron tasks on Linux servers and feed a SQLite database that handles deduplication, scheduling, and delivery preferences.
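As a rough illustration of the deduplication role SQLite plays here, the sketch below stores one row per item and returns only items it has not seen before. The table name `seen_items`, its columns, and the helper names `open_store` and `record_new` are assumptions made for this example, not the actual schema.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) the dedup store. The schema is hypothetical."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS seen_items (
               source     TEXT NOT NULL,
               item_id    TEXT NOT NULL,
               title      TEXT,
               first_seen TEXT DEFAULT CURRENT_TIMESTAMP,
               PRIMARY KEY (source, item_id)
           )"""
    )
    return conn


def record_new(conn, source, items):
    """Insert (item_id, title) pairs and return only the ones not seen before."""
    fresh = []
    for item_id, title in items:
        cur = conn.execute(
            "INSERT OR IGNORE INTO seen_items (source, item_id, title) "
            "VALUES (?, ?, ?)",
            (source, item_id, title),
        )
        # rowcount is 1 when the row was inserted, 0 when the primary key
        # already existed and the insert was ignored.
        if cur.rowcount == 1:
            fresh.append((item_id, title))
    conn.commit()
    return fresh
```

A cron job could call `record_new` on every run and pass only the returned items on to the delivery step, so repeated runs do not resend old entries.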

These days most of them pipe their output through LLM services to summarise content, extract key points, or reformat it for easier reading.

Daily Newsletters

I have several scrapers that compile daily or weekly digests of curated content, which I usually read over breakfast and in the evenings after work.

- YouTube Subscriptions — Scrapes my subscription list for new uploads, extracts the subtitles, pipes them through AI summarisation, and compiles a digest.

- Reddit Digest — Polls a curated subreddit list via the Reddit API, filters threads above configurable upvote thresholds, and emails a morning briefing. This scraper has run continuously for roughly six years and completely replaced daily manual browsing.

-

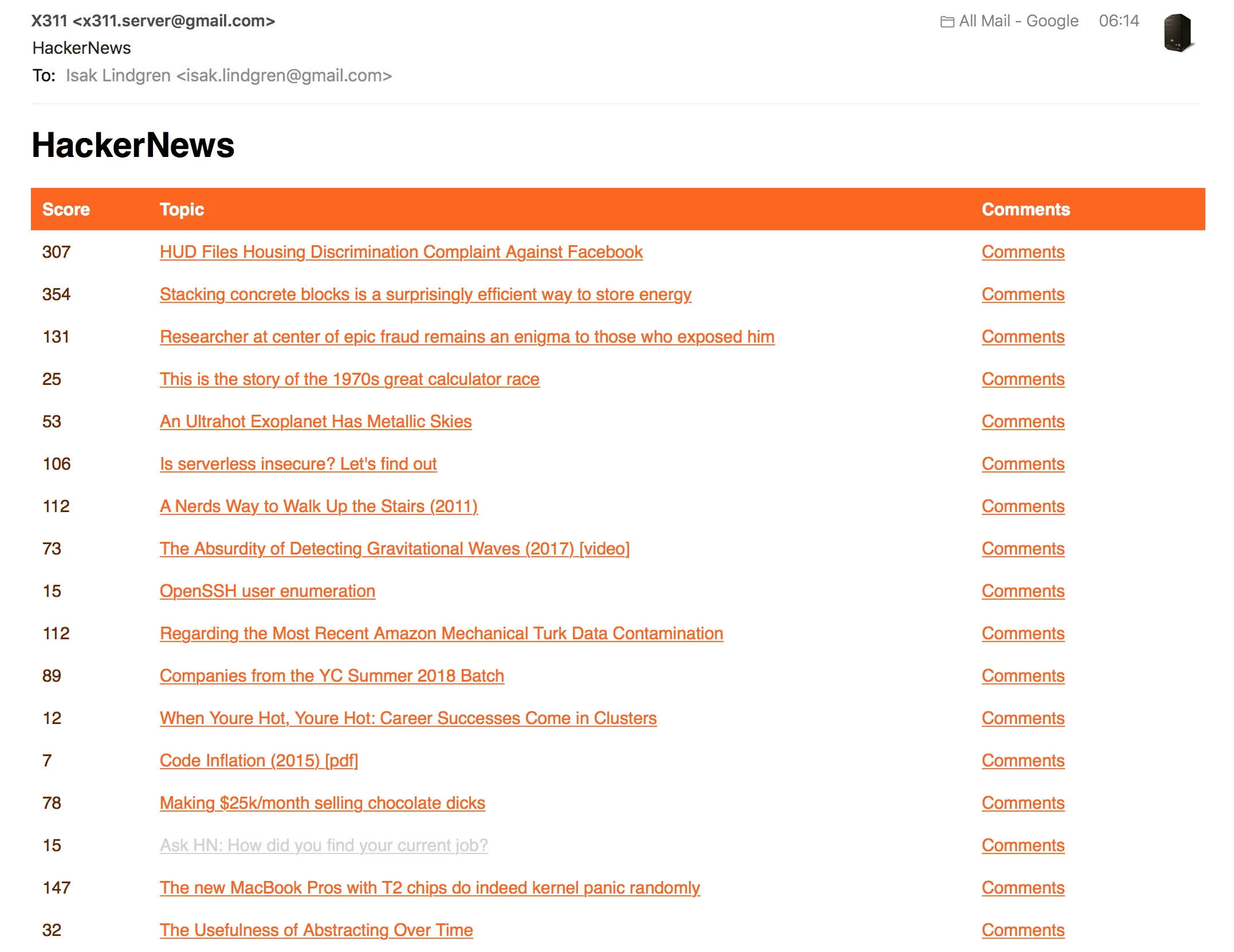

Hacker News Highlights — Mirrors the Reddit workflow but focuses on HN front-page retention so I never miss standout discussions.

- RSS Collector — Consolidates dozens of feeds. Some are delivered immediately, while others are aggregated into a “next-morning” email. Keyword filters let me ignore noise or surface posts that match niche interests.
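The threshold and keyword filtering described for these digests can be sketched as one small selection function. Everything named here (`Post`, `select_for_digest`, the `thresholds` mapping, and the `mute` and `surface` keyword lists) is hypothetical and only shows one plausible shape for the rules. Fetching from the Reddit API or RSS feeds is left out.

```python
from dataclasses import dataclass


@dataclass
class Post:
    source: str   # e.g. a subreddit or feed name
    title: str
    score: int    # upvotes or points; use 0 for feeds without scores


def select_for_digest(posts, thresholds, default_threshold=100,
                      mute=(), surface=()):
    """Keep posts that clear their source's threshold or match a surfaced keyword.

    Posts whose title contains a muted keyword are always dropped.
    """
    chosen = []
    for post in posts:
        title = post.title.lower()
        if any(word.lower() in title for word in mute):
            continue  # noise filter wins over everything else
        boosted = any(word.lower() in title for word in surface)
        minimum = thresholds.get(post.source, default_threshold)
        if boosted or post.score >= minimum:
            chosen.append(post)
    # Highest-scoring posts first, so the digest leads with the biggest threads.
    return sorted(chosen, key=lambda p: p.score, reverse=True)
```

Keeping the thresholds in a per-source mapping means a busy source can demand far more upvotes than a niche one without changing the code.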

Long-Form Compilation

- Web Serials to EPUB — Crawls serial fiction blogs, orders posts chronologically, and packages them into EPUB files for offline reading. SQLite records which chapters were already exported so new releases slot in seamlessly.

- Web Comics to CBZ — Similar to the serial fiction tool, but tailored for webcomics. Downloads image assets, renames them for proper ordering, and zips them into CBZ archives compatible with my e-reader.
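The rename-and-zip step for CBZ archives can be sketched with the standard library alone, since a CBZ file is a ZIP archive of images that readers typically sort by filename. The function name `build_cbz` and the zero-padded naming scheme are assumptions for illustration. Downloading the images is not shown.

```python
import zipfile
from pathlib import Path


def build_cbz(pages, output_path):
    """Write pages into a CBZ archive and return the names used inside it.

    pages: list of (original_filename, image_bytes) already in reading order.
    output_path: a filesystem path or a writable binary file object.
    """
    # Zero-pad page numbers so that plain filename sorting matches reading order.
    width = max(3, len(str(len(pages))))
    names = []
    # Images are already compressed, so store them without recompressing.
    with zipfile.ZipFile(output_path, "w", compression=zipfile.ZIP_STORED) as archive:
        for index, (original_name, data) in enumerate(pages, start=1):
            suffix = Path(original_name).suffix.lower() or ".jpg"
            name = f"{index:0{width}d}{suffix}"
            archive.writestr(name, data)
            names.append(name)
    return names
```

Zero-padding matters because a reader that sorts names as text would otherwise place `page-10` before `page-2`.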

Tiny Monitors

- Convenience Stores — Uses wkhtmltopdf to grab visual snapshots of weekly flyers so I can skim sales quickly.

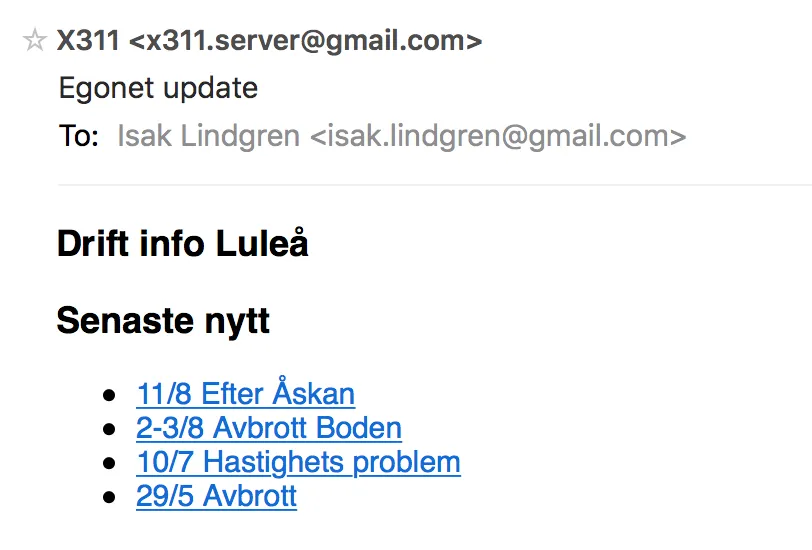

- ISP Status — Monitors my provider’s maintenance page and alerts me to outages or scheduled downtime the moment the content changes.

- Deals & Sales — Watches specific retailers and enthusiast forums for limited-time offers, firing push notifications when rules match desired products.

- Game Releases — Tracks publisher RSS feeds, Steam announcements, and community calendars to notify me about launches the moment they go live.
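Monitors like the ISP status check come down to noticing that a page's content differs from the last run. One simple way to do that, shown here as an assumption rather than the actual implementation, is to hash a normalised copy of the page text and compare it with the stored hash. The names `fingerprint` and `has_changed` are hypothetical, and the regex tag stripping is deliberately crude. A real scraper would more likely extract the relevant element with a parser such as BeautifulSoup.

```python
import hashlib
import re


def fingerprint(html):
    """Return a SHA-256 hash of the page text with tags and extra whitespace removed."""
    text = re.sub(r"<[^>]+>", " ", html)              # crude tag stripping
    text = re.sub(r"\s+", " ", text).strip().lower()  # ignore layout-only changes
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def has_changed(previous_fingerprint, html):
    """Compare a freshly fetched page against the fingerprint from the last run.

    Returns (changed, current_fingerprint) so the caller can store the new value.
    """
    current = fingerprint(html)
    return current != previous_fingerprint, current
```

Normalising before hashing avoids false alerts when only the markup or whitespace changes. The stored fingerprint could live in the same SQLite database as the other scraper state.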

Tooling Notes

- Java handles long-running collectors where concurrency and headless browser integrations matter

- Python plus BeautifulSoup accelerates quick one-off scrapers and prototyping

- SQLite stores scheduling metadata, cache-busting tokens, and user preferences for each delivery channel